Uncertain Times: TikTok, A New Administration, & Brad Pitt AI Scams

News at the intersection of justice & AI. The week of January 20th, 2025. Issue 44.

If you prefer not to read the newsletter, you can listen instead!👂 The Just AI podcast covers everything in the weekly newsletter and a bit more. Have a listen, and follow & share if you find it helpful!

Understanding the impact of Human-AI interaction on discrimination (European Commission)

TikTok is One Step Closer to Being Banned in the US (CNN)

The World’s First AI Chatbot (ELIZA) Has Finally Been Resurrected After Decades (New Scientist)

*Paige works for GitHub, a Microsoft Company

Anthropic achieves ISO 42001 certification for responsible AI

Anthropic is among the first AI labs to earn ISO/IEC 42001:2023 certification, highlighting its commitment to responsible AI development. This international standard guides organizations toward ethical, secure, and accountable AI practices. Certification involves a rigorous analysis of the organization, an examination of its systems and processes, and the implementation of management controls for AI, covering risk management, testing, transparency, and oversight.

The certification, issued by Schellman Compliance, builds on three existing efforts: Anthropic’s Responsible Scaling Policy, the risk governance framework it uses to mitigate risks from frontier AI systems; Constitutional AI, its method for training models to follow a written set of principles using AI-generated feedback; and its safety research initiatives.

Details on the certification and its implications for AI governance are available on Anthropic's Trust Center. This achievement underscores the company’s leadership in AI safety.

👉 Why it matters: Anthropic is sticking to its mission, which is saying something in a year when tech companies are backing away from their commitments to safety, diversity, and sustainability. Anthropic has always billed itself as an AI lab committed to building, using, and deploying AI responsibly. Its decision to pursue this certification while other organizations are scaling back shows not only that Anthropic meant it when it said it was on the side of safe AI, but also that it stays committed to its mission even as the political and social winds change. Responsible AI rating: ✅

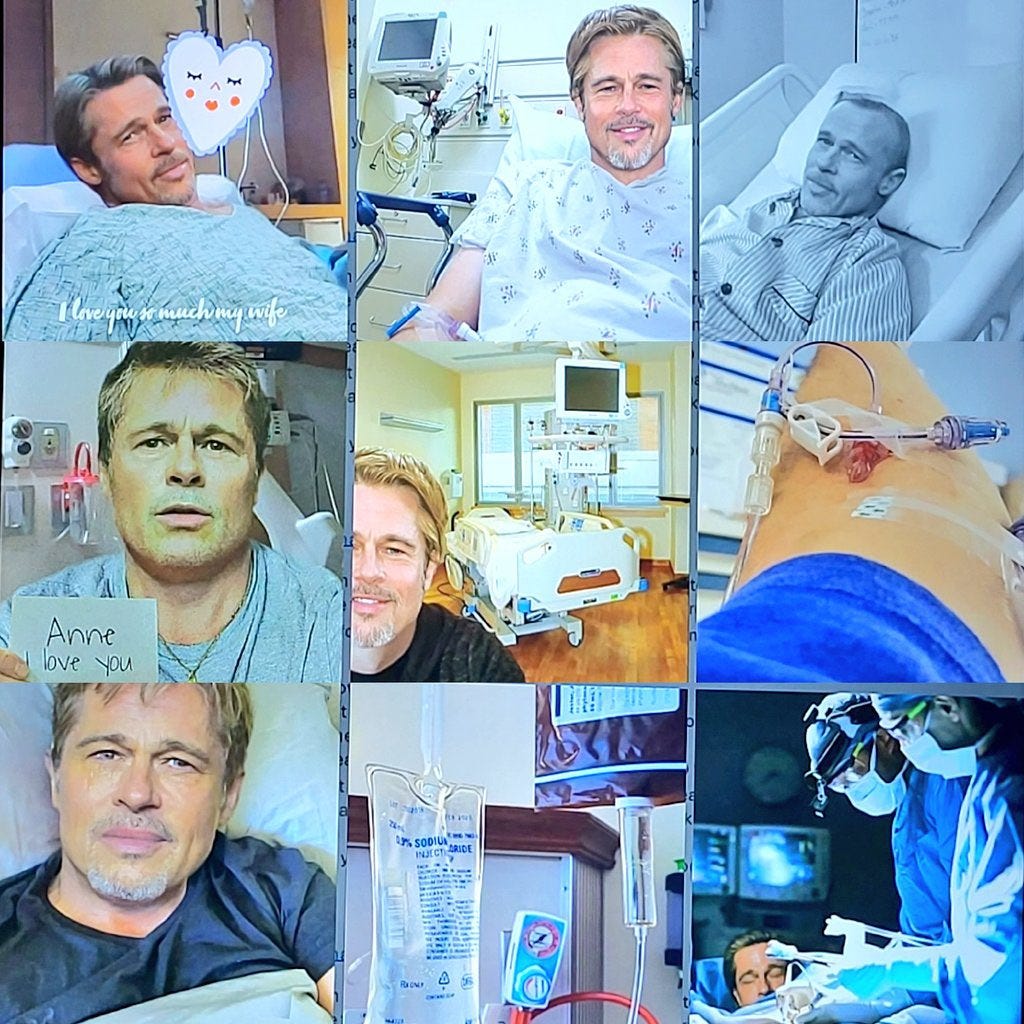

French Woman Loses Nearly $1M Through Scam Using AI Photos of Brad Pitt

A French woman lost €830,000 ($850,000) after scammers posing as Brad Pitt conned her using AI-generated photos and fake news reports. The 53-year-old interior designer shared her story on French TV and YouTube, describing her doubts and her vulnerability as someone unfamiliar with social media who was going through a divorce at the time. The ordeal left her financially ruined. Although she now knows it was a scam, she defended her actions, emphasizing how convincing AI tools made it. A representative for Brad Pitt stated, “It’s awful that scammers take advantage of fans’ strong connection with celebrities, but this is an important reminder to not respond to unsolicited online outreach, especially from actors who have no social media presence.” French authorities have launched a probe into the scam in an effort to recover some of her assets. Responsible AI rating: 🚩

👉 Why it matters: Those who are digitally literate may be asking, “How did this happen?” The reality is that the internet is full of people who are vulnerable to scams, and the scams themselves are more elaborate and sophisticated than ever. As AI opens up new and unknown realms of possibility, scammers gain new tools, and they will use any means necessary to manipulate people out of their money.

Apple Suspends AI-Generated News Alerts Until Further Notice

Apple has paused its AI-generated news alerts after repeated errors, including false headlines attributed to news outlets. The feature, which generated summaries of news app notifications, drew backlash from media organizations like the BBC for spreading misinformation, such as inaccurately reporting a suicide. Apple acknowledged the issue and promised improvements, stating, “We are working on improvements and will make them available in a future software update.” Journalistic groups, including Reporters Without Borders, warned of the risks AI poses to accurate news delivery, stating, “Innovation must never come at the expense of the right of citizens to receive reliable information.” Critics highlighted the dangers of AI "hallucinations" and their impact on public trust. This rare move by Apple underscores the challenge of balancing innovation with reliability in AI-driven tools. Responsible AI rating: ✅

👉 Why it matters: Misinformation and disinformation are already major global concerns in the digital age, and the rise of AI fuels those concerns further by making it easier to spread inaccurate stories that can harm the public. Usually, concern about misinformation and disinformation centers squarely on states that want to control a narrative, or on other bad actors focused on causing mayhem. Because this case involves a reputable tech brand with wayward technology, it puts the spotlight on misinformation in a new way. It shows what happens when companies rush technology out the door without conducting proper safety checks, or without continuing those checks over time.

OpenAI Releases the AI in America Economic Blueprint

OpenAI has unveiled an Economic Blueprint advocating for policies to secure America’s leadership in AI innovation. As political tides change, it’s becoming increasingly common for organizations to provide a public point of view on topics related to the future of innovation and tech policy. OpenAI’s recommendations focus on expanding AI infrastructure, creating jobs, and implementing nationwide regulations to ensure safety and equitable access. OpenAI warns of risks if the US fails to act, including losing investment to global competitors like China. The Blueprint also highlights AI’s potential to drive economic growth and re-industrialization across communities. As part of its efforts, OpenAI will launch the “Innovating for America” initiative on January 30 in Washington, D.C., promoting collaboration between policymakers and the tech industry to shape the future of AI.

👉 Why it matters: The future of AI is uncharted territory. Leading AI companies liken the AI era to earlier periods of major innovation in the US, but the comparison only goes so far given the differences in scale, globalization, automation, and digitalization. One notable point is that both OpenAI’s and Microsoft’s recommendations start from the assumption, and the goal, that the US should lead in AI. This is largely because these companies will be heavily affected by any AI regulation that comes to fruition at the federal or state level. How large US tech companies engage with the incoming administration will have a massive impact on how the future of AI is shaped, and on whether the US can lead in this space for years to come.

Perspectives on the New Trump Administration’s AI Impact Plan

As the Trump administration prepares to re-enter the White House, its AI strategy is taking shape. Central to the plan appears to be rescinding Biden’s 2023 Executive Order on AI, which Trump has criticized as hindering innovation. Instead, the administration is signaling that it will prioritize deregulation, aiming to foster private-sector growth and maintain U.S. leadership in AI. Key appointees, including Michael Kratsios (OSTP) and David Sacks (AI & Crypto Czar), point to a focus on free-market principles.

Trump’s AI priorities echo his first term’s American AI Initiative, which emphasized research investment, workforce development, and public-private partnerships, though critics noted that it lacked sufficient funding. In the coming term, Trump seems likely to expand AI’s role in national security by tightening export restrictions and boosting defense-related AI, such as cybersecurity and autonomous systems.

The impact of these policies will depend on how the administration balances rapid growth with ethical considerations while pursuing continued U.S. dominance in AI.

👉 Why it matters: The administration’s deregulatory approach could accelerate innovation, but it raises concerns about oversight, algorithmic bias, and safety. Trump’s strategy contrasts with Biden’s emphasis on ethical standards and responsible innovation, yet both clearly prioritize American leadership in AI. While a lot remains to be seen, there is reason to believe the incoming administration will put innovation and global AI dominance ahead of AI safety, which means the US could go another four years without any meaningful national regulation of the technology. Time will tell, but as with any incoming administration, its decisions on AI advancement, safety, and policy should be closely monitored.

Spotlight on Research

A Generative Model for Inorganic Materials Design

Here, we present MatterGen, a model that generates stable, diverse inorganic materials across the periodic table and can further be fine-tuned to steer the generation towards a broad range of property constraints. Compared to prior generative models, structures produced by MatterGen are more than twice as likely to be novel and stable, and more than 10 times closer to the local energy minimum.

Understanding the Artificial Intelligence Diffusion Framework

This paper analyzes the U.S. Framework for Artificial Intelligence Diffusion, issued in January 2025, which manages the global diffusion of advanced artificial intelligence (AI) capabilities through U.S. export controls. Given the framework’s significance for U.S. AI policy, the paper summarizes and analyzes its main features to help policymakers and stakeholders understand its mechanisms, strategic rationale, and potential impact.

Watch: Meta’s AI Persona Troubles

Let’s Connect:

Find out more about our philosophy and services at https://www.justaimedia.ai.

Connect with me on LinkedIn, or subscribe to the Just AI with Paige YouTube channel to see more videos on responsible AI.

Looking for a speaker at your next AI event? Email hello@justaimedia.ai if you want to connect 1:1.

How AI was used in this newsletter:

I personally read every article I reference in this newsletter, but I’ve begun using AI to summarize some articles.

I DO NOT use AI to write the ethical perspective; that comes from me.

I occasionally use AI to create images.