This week in AI, we’re seeing increasingly creative uses of generative AI, and the release of an AI-generated song inspired by Drake and The Weeknd sent shockwaves through the music industry.

In fashion, designers and brands are now turning to generative AI to create digital models, leading some to question the future of humans in an industry as old as time.

With all of these industry-spanning uses of AI, it seemed like a good time to get back to the basics of AI ethics (what they are, why we need them, and how we move forward with implementation) to better understand how we can govern AI.

At least as far back as Isaac Asimov and his Three Laws of Robotics, people have been looking for ways to govern technology so that it doesn’t negatively impact human lives. These days, in what has been dubbed “the AI Era,” we’re seeing people talk about “responsible AI” and “ethical AI,” and use terms like “based” and “woke” (however misguided) to draw distinctions that are easier to understand. How we navigate this moment in technical innovation and, indeed, in history, is not easily understood, but it is absolutely critical for humanity. In an effort to find some stability amid these new waves of innovation, people are asking the ultimate question: what is the best way to govern AI?

The answer, much like in politics, is “it depends.” To get to the root of how we govern, we have to dig deep into what we value and let that lead us to our methodology. So, let’s start with the basics of how we approach governance by establishing some key definitions.

What’s the difference between ethical AI and responsible AI?

Both of these terms have evolving definitions and are often used interchangeably. Because AI is relatively new in application, the definitions surrounding it continue to grow and change to keep pace with the technology, and this will likely continue until the tech has settled a bit. In my work, it’s become necessary to differentiate between responsible AI and ethical AI so that we can operate from a shared language.

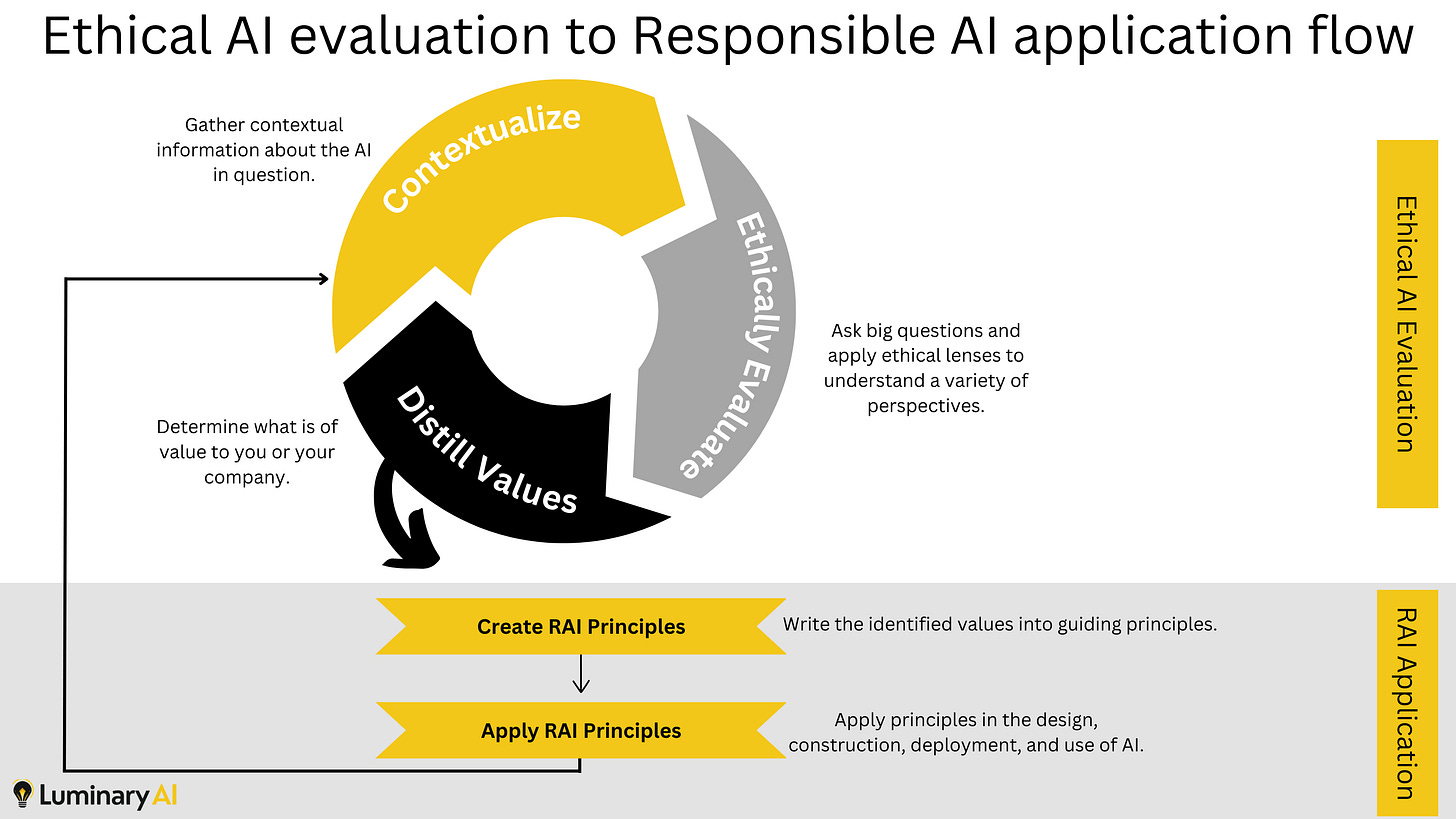

Ethical AI is the research and theoretical consideration of how ethical concepts and values might be applied to artificial intelligence. It’s a field led by curiosity, and it’s ever-expanding as new applications of AI come to the fore. The study of ethical AI gives people the ability to explore these concepts within a given context. For example, the ethical considerations for using AI in academia might differ from those for using AI in banking. Each of these areas needs to be viewed through an ethical lens, with special attention paid to the creator of the AI, its use cases, its users, potential harms, and more.

The goal is to take a broad view and ask questions from each of those perspectives in order to understand the benefits and risks of the AI. The goal is NOT to “get through the process” or “get to an answer.” Companies or individuals could even decide that they do NOT want ethics to play a role at all, and that’s an ethical decision in and of itself.

Responsible AI is the practical application of those ethical considerations. Once we’ve evaluated the critical factors and risks through the ethical consideration process, we get to the business of responsible AI. Responsible AI takes a set of factors into account to create a framework, usually made up of “principles” that guide the creation, use, and deployment of the AI.

Here are some of the factors considered when creating or adopting responsible AI principles:

Relevant laws and policies

Risk and benefit to humanity

Long-term positive and negative economic impact (both for the institution and for the country/world)

Climate impact and sustainability

Risk and benefit to customers/users

Risk and benefit to the integrity of the institution in question

Risk and benefit to the brand in question

Company/institution values

This list isn’t comprehensive, but it shows the range of factors we consider when we create principles to guide the creation, deployment, or use of AI.

I’ve put together a graphic below that shows how the ethical AI evaluation process and the responsible AI application process work together.

If you have questions, or you think something is amiss, let me know! I’d love to hear from you.

Credo AI is the leader in responsible AI for the enterprise sector. With their technology they help enterprises ensure that responsible AI is built into the fabric of their code, technology and business through their Contextual AI Governance Platform Software. I’ve met with a few of Credo’s employees over the past two years and I can confidently say that this team knows their stuff. From frameworks to policy, and policy to principles, they’re well equipped to increase AI ROI and help decrease risk. And yes, they’re hiring.

Tech leaders are mentioning AI a LOT during earnings calls. (Fox Business)

AI-generated supermodels are impacting the fashion workforce. (NBC News)

AI is impacting a quarter of the workforce. (Bloomberg)

I regularly get asked how people can get into responsible AI, so here are some resources! I’ll keep adding to this list as I come across more information.

Responsible AI Institute is a nonprofit dedicated to helping organizations on their responsible AI journey. They provide awesome ways for their members to get connected through their Slack channel, get resources through their newsletter, and get invited to community events. Plus, they’re a leader in responsible AI, so they’re an organization to watch.

The Center for AI and Digital Policy offers policy clinics, and they look amazing. If you’re interested in AI policy, this might be for you! I’m hoping to apply for the Fall 2023 session.

Subscribe to my Substack if you want to receive this weekly update.

Connect with me on LinkedIn.

Do you need help on your responsible AI journey? Email me at thepaigelord@gmail.com

Follow on TikTok, where I chat about responsible AI.